Full class information can be found here.

Guest Lecture - An Emergent Art Movement: AI & Music in XR

For this joint VR class on Building Immersive Experiences, with students from MIT, Berklee College of Music and Harvard, each student was given a VR headset. Ryan Groves coordinated the creation of a custom VR world where the students met for the lecture, and speakers Ryan Groves, Roman Rappak and Dan Franke discussed their work in AI music generation for XR, live music in XR, and VR animation for music experiences.

In the keynote lecture for Stanford's music technology group, CCRMA, Ryan highlighted the distinct musical components that make up the vast topic of automatic musical composition.

Given his background in computational music theory, he emphasized the importance of building and validating machine-learning models that perform particular musical tasks, and of leveraging those models to create artificially intelligent compositional agents that can carry out the entire music creation process.

Shahar Sorek

Robin Hunicke

Cameron Wood

Ryan Groves

SXSW panel: Is Modding the Key to User-Generated Content?

Organized and moderated by Ryan Groves. Gaming has a long history of modding. Counter-Strike and Defense of the Ancients were both user-created mods before becoming massive franchises. Modding has matured to the point where games are building in mod support directly so that users can create their own content. Our panelists are experts in the space: Shahar Sorek is CMO of Overwolf, the all-in-one platform for creators to build and monetize in-game apps & mods; Robin Hunicke is the co-founder of Funomena, an independent game studio that makes experimental games for new technologies, where players create and share fully-fledged games, music, and art; Arturo Perez is CEO of SynthRiders, a hugely popular VR rhythm game that lets its users create and play custom maps; Ryan Groves founded Infinite Album, a game overlay and Twitch extension that uses AI to create interactive music for streamers and their audience.

SXSW panel: How Open AI Models Enable Artists to Innovate

Organized and moderated by Ryan Groves. Over the last 5 years, AI has become more accessible and usable. AI researchers now release the models that they've trained on vast amounts of data, letting anyone use the result. This has created a surge of innovation among artists across multiple mediums who are using these models to generate art in unique ways. This panel highlights some prominent innovators in the space: Jane Adams is a PhD candidate in Data Visualization and a GAN artist who goes by the name nodradek; Dalena Tran directed the music video "Incomplete", merging AI & 3D compositing; Hans Brouwer created Wavefunk, a music video generator that morphs to the beat or chord of any song; Ryan Groves is the co-organizer of the AI Song Contest and founder of Infinite Album, which generates music for livestreamers.

Jane Adams

Ryan Groves

Hans Brouwer

Dalena Tran

Immersive Panel: XR at a Crossroads - Maintaining Empathy

Ryan organized and moderated SXSW's first fully immersive panel, with the help of immersive content experts Studio Syro. XR is finally achieving big sales numbers with the Quest 2. At the same time, Facebook has merged with its Oculus brand, moving further towards a social monopoly. XR is in danger of losing control of the content that is shared, the platforms that are supported, and the communities that so desperately need a voice. This panel highlights innovators who serve the community to create joy, empathy and inclusivity: Lucas Rizzotto (“Lucas Builds the Future”, Where Thoughts Go) uses comedy and introspection to create empathy in XR; Ryan Groves (Infinite Album) uses AI to adapt artists’ music for long-form experiences; Christina Kinne (Tivoli VR) is CMO for an open-source metaverse; and Dan Franke is the co-founder of Studio Syro, a leading immersive Quill-based studio.

SXSW panel: The Future of Live Music, Blended Reality

Organized and moderated by Ryan Groves. XR provides a huge opportunity to reinvent the shared musical experience. Platforms have tried new approaches to live music, either by streaming concerts in VR or by creating VR-exclusive events. But most approaches either fail to replicate the experience of an in-person event or explicitly exclude a co-located audience. Certain groups, however, are creating new music experiences using a mix of VR, AR and live venues.

Our panel consists of experts at the intersection of Music and VR. Anne McKinnon is an XR consultant, advisor and writer, focused on immersive events. Eric Wagliardo founded &Pull and created Pharos AR, a collaboration with Childish Gambino. Roman Rappak is the lead creative of Miro Shot, an XR band/collective. Ryan Groves is a music technologist and founder of Arcona.ai.

Ryan Groves

Anne McKinnon

Roman Rappak

Eric Wagliardo

Ryan Groves

Byrke Lou

Pascal Pilon

The Impact of AI on Music Creation

Organized and moderated by Ryan Groves. Artificial Intelligence is advancing at an exceptional rate, continually redefining the set of activities that were previously only achievable by humans. Indeed, even the creative industries have been impacted. But the dialogue about AI for creative activities doesn’t have to be one of conflict and replacement.

Recently, music technologists have been using AI to extend what humans can do musically: to foster collaboration, to create rapid musical prototypes, and to create new modes of music consumption. Two pioneers in this field are the companies Landr and Melodrive. Landr uses AI to automatically master musical tracks, but also enables users to collaborate, share and promote their music. Melodrive is creating an AI that composes, and re-composes, music so that it can be truly adaptive.

The Next Uncanny Valley: Interaction in XR

Organized and moderated by Ryan Groves. Visual technologies have come a very long way in terms of being able to accurately simulate 3D environments and human appearance in controlled situations. The so-called “Uncanny Valley” has been centered around visual resemblance. With the rise of AI and the access to interactive tech, there is a new Uncanny Valley being created through human-like characters and interactions, where the focus is on narrative rather than visuals.

This creates an opportunity to redefine how people engage and interact with machines, and with each other. This new paradigm will not only require new tools, like storyboarding in VR (Galatea) and emotion-driven music (Melodrive), but also new approaches to promote introspective interactions (Where Thoughts Go), and new methodologies for the performance and direction of this new theatrical medium (Fiona Rene).

Ryan Groves

Fiona Rene

Lucas Rizzotto

Jesse Damiani

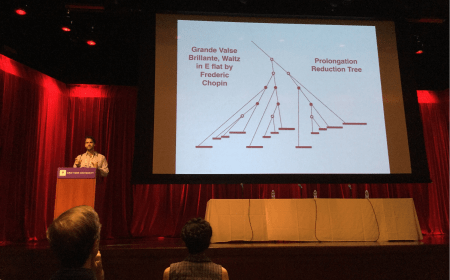

Won Best Paper overall; see Awards for more info.

Highlighted Talk

At the 2016 conference of the International Society for Music Information Retrieval, Ryan Groves presented his paper "Automatic Melodic Reduction Using a Supervised Probabilistic Context-Free Grammar", which covers his work on automatically reducing melodies using machine learning techniques borrowed from Natural Language Processing (NLP).

Additional Talks/Lectures

Panelist, Creative AI and Virtual Artists (2023)

Panelist, The Future of Music with AI - Critical questions on Creativity and Technology (2023)

Panelist, Synthetic Media (2022)

Guest Lecture, AI in the Music Industry (2022)

Panelist, Sync Panel (2020)

Guest Lecture, Adaptive Music in Gaming (2017)

Panel participant, Music & VR (2019)

Tutorial, Creative Applications of Music and Audio Research (2019)

Lectures: Python for Machine Learning; Algorithms (2019-)

Invited Talk, Building AI: A Systematic Approach to Music Data Problems

Internal Talk to ML Team, Applying Computational Linguistics to Music Theory Analysis

Internal Talk to ML Team, Computational Music Theory with Probabilistic Grammars